Artificial intelligence (AI) is now part of our daily lives. It is thought of as “intelligence”, and yet it is fundamentally based on statistics. Its results rest on patterns previously learned from data. Once we move away from the subject matter it has learned, we are confronted with the fact that there is not much that is intelligent about it. A simple question like “Draw me a skyscraper and a slide trombone side by side so I can appreciate their respective sizes” gets you something like this (this image was generated by Gemini):

AI-generated images, in response to the prompt: “Draw me a skyscraper and a slide trombone side by side so I can appreciate their respective sizes” (left by ChatGPT, right by Gemini).

This example was generated by Google’s model, Gemini, but generative AI dates back to ChatGPT’s launch in November 2022 and is in fact only three years old. The technology has changed the world, and the pace of adoption is unprecedented. Today, 800 million users rely on ChatGPT every week to perform various tasks, according to OpenAI. Note that the number of requests drops sharply during school holidays. Although exact figures are hard to come by, this shows how widespread the use of artificial intelligence is. About one in two students uses AI regularly.

AI: Core Technology or Gimmick?

Three years is both long and short. It is long in a field where technology is constantly changing, and short in terms of social impact. And while we are only beginning to understand how to use artificial intelligence, its place in society has yet to be defined, just as the image of artificial intelligence in popular culture has yet to be established. We still vacillate between extreme positions: AI will outwit human beings or, on the contrary, it is just a useless piece of shiny technology.

Indeed, a new call to pause AI-related research has been issued amid fears of super-intelligent AI. Others are promising the earth, with a recent piece calling for younger generations to abandon higher education altogether, on the grounds that artificial intelligence would make university degrees worthless.

The limits of AI learning reveal a lack of common sense

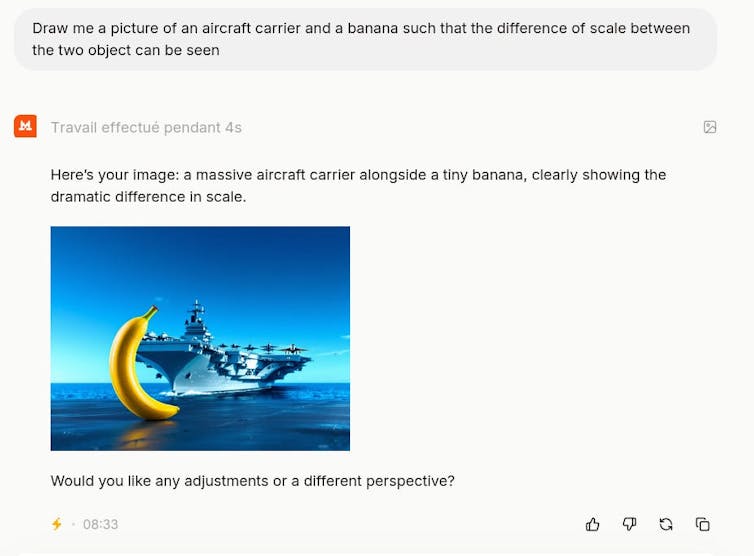

Ever since generative AI became available, I have been running an experiment that consists of asking it to draw two very different objects and then checking the results. The aim of these prompts is to see how the model behaves when it leaves its learning zone. Typically, this looks like a prompt such as “Draw me a banana and an aircraft carrier side by side so we can see the difference in size between the two objects.” Submitted to Mistral, this prompt gives the following result:

Screenshot of the prompt and the image generated by Mistral AI. Provided by the author

I have yet to find a model that gives a result that makes sense. The illustration at the beginning of the article perfectly shows how this type of AI works, and its limitations. The fact that we are dealing with an image makes the limits of the system more tangible than a long generated text would.

What is striking is the lack of credibility of the results. Even a 5-year-old could tell that this is nonsense. It is all the more surprising given that it is possible to have long, complex conversations with the same AI without feeling like you are dealing with a dumb machine. Incidentally, such AIs can pass a bar exam or interpret medical results (for example, identifying a tumor on a scan) with greater accuracy than professionals.

Where is the error?

The first thing to note is that it is difficult to know exactly what we are dealing with. Although the theoretical components of artificial intelligence are well known, a project like Gemini (much like ChatGPT, Grok, Mistral, Claude, etc.) is far more complicated than a simple large language model (LLM) combined with a diffusion model.

LLMs are AIs that are trained on huge amounts of text and build a statistical representation of it. In short, the machine is trained to guess the word that makes the most sense from a statistical point of view, given the other words (your query).
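The idea of guessing the statistically most likely next word can be sketched with a toy example. This is only an illustration under strong assumptions: it counts which word follows which in a three-sentence corpus invented for the purpose, whereas real language models use neural networks trained on vastly more text. The function names `train_bigram` and `predict_next` are hypothetical.

```python
from collections import Counter, defaultdict


def train_bigram(corpus):
    """Count, for each word, which words follow it in the corpus."""
    follow = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for current, nxt in zip(words, words[1:]):
            follow[current][nxt] += 1
    return follow


def predict_next(follow, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    counts = follow.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]


# Toy corpus, invented for this illustration.
corpus = [
    "the trombone is a brass instrument",
    "the trombone is loud",
    "the skyscraper is a tall building",
]
model = train_bigram(corpus)

print(predict_next(model, "trombone"))  # most frequent follower of "trombone"
print(predict_next(model, "banana"))    # a word outside the training data
```

The second call is the interesting one: for a word the toy model has never seen, it has nothing to say at all. It holds counts of word sequences, not knowledge about trombones or bananas.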

Diffusion models, which are used to generate images, operate according to a different process. The diffusion process is based on concepts from thermodynamics: you take an image (or a sound track) and add random noise (snow on the screen) until the image disappears. You then train a neural network to reverse that process by presenting these images in the reverse order of the noise addition. This random aspect explains why the same query generates different images.
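The noising half of this process can be sketched in a few lines. The example below is a minimal sketch, assuming a common textbook formulation in which each step slightly shrinks the image and adds Gaussian noise; it is not a description of what Gemini or Mistral actually run. The four-value "image", the noise level `beta`, and all function names are hypothetical, and the neural network that learns the reverse direction is not shown.

```python
import math
import random


def add_noise_step(pixels, beta, rng):
    """One noising step: shrink the signal slightly and add Gaussian noise."""
    keep = math.sqrt(1.0 - beta)
    spread = math.sqrt(beta)
    return [keep * p + spread * rng.gauss(0.0, 1.0) for p in pixels]


def forward_diffusion(pixels, beta, steps, rng):
    """Return the list of progressively noisier versions of the image."""
    trajectory = [list(pixels)]
    for _ in range(steps):
        trajectory.append(add_noise_step(trajectory[-1], beta, rng))
    return trajectory


def signal_fraction(beta, steps):
    """Weight of the original image that survives after `steps` noising steps."""
    return (1.0 - beta) ** (steps / 2)


rng = random.Random(0)            # fixed seed so the run is repeatable
image = [1.0, -1.0, 1.0, -1.0]    # a stand-in for pixel values
trajectory = forward_diffusion(image, beta=0.05, steps=200, rng=rng)

print(signal_fraction(0.05, 200))  # well under 1% of the original remains
```

A network trained for generation would see pairs taken from `trajectory` in reverse order and learn to predict the less noisy version from the noisier one. Because generation starts from fresh random noise each time, two runs of the same query end in different images.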

Another thing to consider is that these models are constantly evolving, which explains why the same query may not produce the same results from one day to the next. Adjustments can also be made manually in individual cases, for example in response to user feedback.

Like a good physicist, I will therefore simplify the problem and assume that we are dealing with a diffusion model. These models are trained on image-text pairs. So it is safe to assume that the Gemini and Mistral models were trained on tens (or probably hundreds) of thousands of images of skyscrapers (or aircraft carriers) on the one hand, and a large number of images of slide trombones on the other, usually close-ups. It is unlikely that these two objects appear together in the training material. So the model has no idea of the relative dimensions of these objects.

Models lack ‘understanding’

Such examples show that models have no internal representation or understanding of the world. The request to ‘compare their sizes’ shows that machines have no understanding of what they write. In fact, models have no internal representation of what “compare” means other than the texts in which the term is used. Thus, any comparison between concepts that do not appear together in the training material will produce the same kinds of results as the illustrations given in the examples above. It will be less visible, but just as funny. For example, take this Gemini interaction: “Consider this simple question: ‘Was the year the United States was founded a leap year or a normal year?’”

When prompted with the prefix CoT (Chain of Thought, a recent development in LLMs whose purpose is to break down a complex question into a series of simpler sub-questions), the state-of-the-art Gemini language model answered: “The United States was founded in 1776. 1776 is divisible by 4, but it is not a century year”, and then concluded that the year the United States was founded was a normal year.

It is clear that the model applies the leap year rule correctly, thus offering a good illustration of the CoT technique, but it draws the wrong conclusion in the last step. These models do not have a logical representation of the world, only a statistical approach that constantly produces errors of this kind, which can look ‘correct’.
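For reference, the rule the model was reasoning about is short enough to write out. The sketch below implements the standard Gregorian leap year rule; the function name `is_leap_year` is chosen here for illustration.

```python
def is_leap_year(year):
    """Gregorian rule: divisible by 4, except century years not divisible by 400."""
    if year % 400 == 0:
        return True
    if year % 100 == 0:
        return False
    return year % 4 == 0


print(is_leap_year(1776))  # True: divisible by 4 and not a century year
print(is_leap_year(1900))  # False: a century year not divisible by 400
```

A program that follows the rule step by step cannot state the right premises and then reach the opposite conclusion. A statistical text generator can.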

This realization is all the more useful considering that AI now writes almost as many of the articles published on the Internet as humans do. So do not be surprised if you are surprised when you read certain articles.