OpenAI, the maker of the most popular AI chatbot, used to say it aimed to build artificial intelligence that “safely benefits humanity, unconstrained by a need to generate financial return,” according to its 2023 mission statement. But the ChatGPT maker seems to no longer have the same emphasis on doing so “safely.”

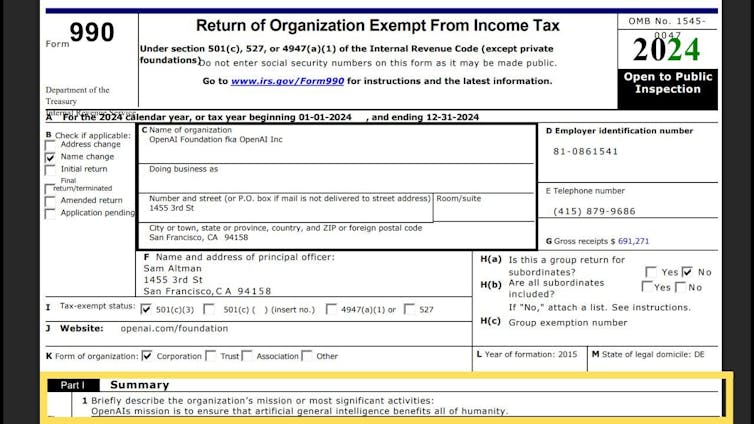

While reviewing its latest IRS disclosure form, which was released in November 2025 and covers 2024, I noticed OpenAI had removed “safely” from its mission statement, among other changes. That change in wording coincided with its transformation from a nonprofit organization into a business increasingly focused on profits.

As a scholar of nonprofit accountability and the governance of social enterprises, I see the deletion of the word “safely” from its mission statement as a significant shift that has largely gone unreported – outside highly specialized outlets.

And I believe OpenAI’s makeover is a test case for how we, as a society, oversee the work of organizations that have the potential to both provide enormous benefits and do catastrophic harm.

Tracing OpenAI’s origins

OpenAI, which also makes the Sora video artificial intelligence app, was founded as a nonprofit scientific research lab in 2015. Its original purpose was to benefit society by making its findings public and royalty-free rather than to make money.

To raise the money that developing its AI models would require, OpenAI, under the leadership of CEO Sam Altman, created a for-profit subsidiary in 2019. Microsoft initially invested US$1 billion in this venture; by 2024 that sum had topped $13 billion.

In exchange, Microsoft was promised a portion of future profits, capped at 100 times its initial investment. But the software giant didn’t get a seat on OpenAI’s nonprofit board – meaning it lacked the power to help steer the AI venture it was funding.

A subsequent round of funding in late 2024, which raised $6.6 billion from multiple investors, came with a catch: The funding would become debt unless OpenAI converted to a more traditional for-profit business in which investors could own shares, without any caps on profits, and possibly occupy board seats.

Establishing a new structure

In October 2025, OpenAI reached an agreement with the attorneys general of California and Delaware to become a more traditional for-profit company.

Under the new arrangement, OpenAI was split into two entities: a nonprofit foundation and a for-profit business.

The restructured nonprofit, the OpenAI Foundation, owns about one-fourth of the stock in a new for-profit public benefit corporation, the OpenAI Group. Both are headquartered in California but incorporated in Delaware.

A public benefit corporation is a business that must consider interests beyond shareholders, such as those of society and the environment, and it must issue an annual benefit report to its shareholders and the public. However, it is up to the board to decide how to weigh those interests and what to report in terms of the benefits and harms caused by the company.

The new structure is described in a memorandum of understanding signed in October 2025 by OpenAI and the California attorney general, and endorsed by the Delaware attorney general.

Many business media outlets heralded the move, predicting that it would usher in more investment. Two months later, SoftBank, a Japanese conglomerate, finalized a $41 billion investment in OpenAI.

Changing its mission statement

Most charities must file forms annually with the Internal Revenue Service with details about their missions, activities and financial status to show that they qualify for tax-exempt status. Because the IRS makes the forms public, they have become a way for nonprofits to signal their missions to the world.

In its forms for 2022 and 2023, OpenAI said its mission was “to build general-purpose artificial intelligence (AI) that safely benefits humanity, unconstrained by a need to generate financial return.”

OpenAI’s mission statement as of 2023 included the word ‘safely.’

IRS via Candid

That mission statement has changed, as of OpenAI’s 990 form for 2024 – which the company filed with the IRS in late 2025. It became “to ensure that artificial general intelligence benefits all of humanity.”

OpenAI’s mission statement as of 2024 no longer included the word ‘safely.’

IRS via Candid

OpenAI had dropped its commitment to safety from its mission statement – along with a commitment to being “unconstrained” by a need to make money for investors. According to Platformer, a tech media outlet, it has also disbanded its “mission alignment” team.

In my opinion, these changes explicitly signal that OpenAI is making its profits a higher priority than the safety of its products.

To be sure, OpenAI continues to mention safety when it discusses its mission. “We view this mission as the most important challenge of our time,” it states on its website. “It requires simultaneously advancing AI’s capability, safety, and positive impact in the world.”

Revising its legal governance structure

Nonprofit boards are responsible for key decisions and upholding their organization’s mission.

Unlike private companies, board members of tax-exempt charitable nonprofits cannot personally enrich themselves by taking a share of earnings. In cases where a nonprofit owns a for-profit business, as OpenAI did with its previous structure, investors can take a cut of profits – but they typically do not get a seat on the board or have an opportunity to elect board members, because that would be seen as a conflict of interest.

The OpenAI Foundation now has a 26% stake in OpenAI Group. In effect, that means that the nonprofit board has given up nearly three-quarters of its control over the company. Software giant Microsoft owns a slightly larger stake – 27% of OpenAI’s stock – due to its $13.8 billion investment in the AI company to date. OpenAI’s employees and its other investors own the rest of the shares.

OpenAI CEO Sam Altman speaks in June 2025, as his company sought to change its structure.

Justin Sullivan/Getty Images

Seeking more investment

The main goal of OpenAI’s restructuring, which it called a “recapitalization,” was to attract more private investment in the race for AI dominance.

It has already succeeded on that front.

As of early February 2026, the company was in talks with SoftBank for an additional $30 billion and stood to get up to a total of $60 billion from Amazon, Nvidia and Microsoft combined.

OpenAI is now valued at over $500 billion, up from $300 billion in March 2025. The new structure also paves the way for an eventual initial public offering, which, if it happens, would not only help the company raise more capital through stock markets but would also increase the pressure to make money for its shareholders.

OpenAI says the foundation’s endowment is worth about $130 billion.

Those numbers are only estimates because OpenAI is a privately held company without publicly traded shares. That means these figures are based on market value estimates rather than any objective evidence, such as market capitalization.

When he announced the new structure, California Attorney General Rob Bonta said, “We secured concessions that ensure charitable assets are used for their intended purpose.” He also predicted that “safety will be prioritized” and said the “top priority is, and always will be, protecting our kids.”

Steps that might help keep people safe

At the same time, several conditions in the OpenAI restructuring memo are designed to promote safety, including:

A safety and security committee on the OpenAI Foundation board has the authority to “require mitigation measures” that could potentially include halting the release of new OpenAI products based on assessments of their risks.

The for-profit OpenAI Group has its own board, which must consider only OpenAI’s mission – rather than financial issues – regarding safety and security issues.

The OpenAI Foundation’s nonprofit board gets to appoint all members of the OpenAI Group’s for-profit board.

But given that neither the mission of the foundation nor that of the OpenAI Group explicitly alludes to safety, it will be hard to hold their boards accountable for it.

Furthermore, since all but one board member currently serve on both boards, it is hard to see how they might oversee themselves. And the memorandum signed by the California attorney general doesn’t indicate whether he was aware of the removal of any reference to safety from the mission statement.

Identifying other paths OpenAI could have taken

There are alternative models that I believe would serve the public interest better than this one.

When Health Net, a California nonprofit health maintenance organization, converted to a for-profit insurance company in 1992, regulators required that 80% of its equity be transferred to another nonprofit health foundation. Unlike with OpenAI, the foundation had majority control after the transformation.

A coalition of California nonprofits has argued that the attorney general should require OpenAI to transfer all of its assets to an independent nonprofit.

At this point, I believe that the public bears the burden of two governance failures. One is that OpenAI’s board has apparently abandoned its mission of safety. And the other is that the attorneys general of California and Delaware have let that happen.