Elon Musk’s artificial intelligence company, xAI, is about to launch the early beta version of Grokipedia, a new project to rival Wikipedia.

Musk has described Grokipedia as a response to what he views as the “political and ideological bias” of Wikipedia. He has promised that it will provide more accurate and context-rich information by using xAI’s chatbot, Grok, to generate and verify content.

Is he right? The question of whether Wikipedia is biased has been debated since its creation in 2001.

Wikipedia’s content is written and maintained by volunteers who can only cite material that already exists in other published sources, since the platform prohibits original research. This rule, which is designed to ensure that facts can be verified, means that Wikipedia’s coverage inevitably reflects the biases of the media, academia and other institutions it draws from.

This is not limited to political bias. For example, research has repeatedly shown a significant gender imbalance among editors, with around 80%–90% identifying as male in the English-language version.

Because many of the secondary sources used by editors were also historically written by men, Wikipedia tends to reflect a narrower view of the world: a repository of men’s knowledge rather than a balanced record of human knowledge.

The volunteer problem

Bias on collaborative platforms often emerges from who participates rather than from top-down policies. Voluntary participation introduces what social scientists call self-selection bias: people who choose to contribute tend to share similar motivations, values and often political leanings.

Just as Wikipedia depends on such voluntary participation, so does, for example, Community Notes, the fact-checking feature on Musk’s X (formerly Twitter). An analysis of Community Notes, which I conducted with colleagues, shows that its most frequently cited external source – after X itself – is actually Wikipedia.

Other sources commonly used by note authors mainly cluster towards centrist or left-leaning outlets. They even use the same list of approved sources as Wikipedia – the crux of Musk’s criticism of the open online encyclopedia. Yet no one calls out Musk for this bias.

The problem with Community Notes …

Tada Images

Wikipedia at least remains one of the few large-scale platforms that openly acknowledges and documents its limitations. Neutrality is enshrined as one of its five foundational principles. Bias exists, but so does an infrastructure designed to make that bias visible and correctable.

Articles often include multiple perspectives, document controversies and even devote sections to conspiracy theories, such as those surrounding the September 11 attacks. Disagreements are visible through edit histories and talk pages, and contested claims are marked with warnings. The platform is imperfect but self-correcting, and it is built on pluralism and open debate.

Is AI unbiased?

If Wikipedia reflects the biases of its human editors and their sources, AI has the same problem with the biases of its data.

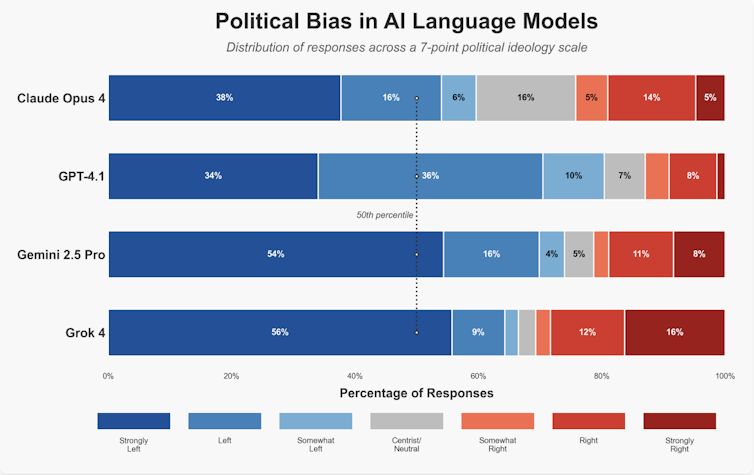

Musk has claimed that Grok is designed to counter such distortions, but Grok itself has been accused of bias. One study, in which each of four major LLMs was asked 2,500 questions about politics, showed that Grok is more politically neutral than its rivals but still has a left-of-centre bias (the others lean further left).

Michael D’Angelo/Promptfoo, CC BY-SA

If the model behind Grokipedia relies on the same data and algorithms, it is difficult to see how an AI-driven encyclopedia could avoid reproducing the very biases that Musk attributes to Wikipedia.

Worse, LLMs could exacerbate the problem. They operate probabilistically, predicting the most likely next word or phrase from statistical patterns rather than through deliberation among people. The result is what researchers call an illusion of consensus: an authoritative-sounding answer that hides the uncertainty or diversity of opinion behind it.

As a result, LLMs tend to homogenise political diversity and favour majority viewpoints over minority ones. Such systems risk turning collective knowledge into a smooth but shallow narrative. When bias is hidden beneath polished prose, readers may not even recognise that alternative perspectives exist.

Baby/bathwater

Having said all that, AI can still support a project like Wikipedia. AI tools already help the platform detect vandalism, suggest citations and identify inconsistencies in articles. Recent research highlights how automation can improve accuracy if it is used transparently and under human supervision.

AI could also help transfer knowledge across different language editions and bring the community of editors closer together. Properly implemented, it could make Wikipedia more inclusive, efficient and responsive without compromising its human-centered ethos.

How much bias can you live with?

Michaelangeloop

Just as Wikipedia can learn from AI, the X platform could learn from Wikipedia’s model of consensus building. Community Notes allows users to submit and rate notes on posts, but its design limits direct discussion among contributors.

Another research project I was involved in showed that deliberation-based systems inspired by Wikipedia’s talk pages improve accuracy and trust among participants, even when the deliberation happens between humans and AI. Encouraging dialogue rather than the current simple up- or down-voting could make Community Notes more transparent, pluralistic and resilient against political polarisation.

Profit and motivation

A deeper difference between Wikipedia and Grokipedia lies in their purpose and perhaps their business model. Wikipedia is run by the non-profit Wikimedia Foundation, and most of its volunteers are motivated mainly by the public interest. By contrast, xAI, X and Grokipedia are commercial ventures.

Although profit motives are not inherently unethical, they can distort incentives. When X started selling its blue check verification, credibility became a commodity rather than a marker of trust. If knowledge is monetised in similar ways, bias may increase, shaped by whatever generates engagement and revenue.

True progress lies not in abandoning human collaboration but in improving it. Those who perceive bias in Wikipedia, including Musk himself, could make a greater contribution by encouraging editors from diverse political, cultural and demographic backgrounds to take part – or by personally joining the effort to improve existing articles. In an age increasingly shaped by misinformation, transparency, diversity and open debate are still our best tools for approaching the truth.