Grokipedia, Elon Musk’s new project launched on October 27, 2025, promises more neutrality than Wikipedia. However, the AI models on which it is based remain marked by biases in their data.

On October 27, 2025, Elon Musk’s artificial intelligence company, xAI, launched a beta version of a new project intended to compete with Wikipedia: Grokipedia. Musk presents it as an alternative to what he sees as Wikipedia’s “political and ideological bias.” The entrepreneur promises that his platform will provide more accurate and better contextualized information thanks to Grok, xAI’s chatbot, which will generate and verify the content.

Is he right? The question of Wikipedia’s bias has been debated since its creation in 2001. Wikipedia’s content is written and updated by volunteers who can only cite sources that have already been published, since the platform prohibits any original research. This rule, which aims to guarantee that facts can be checked, means that Wikipedia’s coverage inevitably reflects the biases of the media, academia and other institutions on which it relies.

These biases are not only political. For example, many studies have shown a strong gender imbalance among contributors, with roughly 80% to 90% identifying as male in the English-language edition. Since most secondary sources are also predominantly produced by men, Wikipedia tends to reflect a narrower vision of the world: a repository of men’s knowledge rather than a truly balanced panorama of human knowledge.

The problem with volunteering

On collaborative platforms, biases often have less to do with the rules than with the composition of the community. Voluntary participation introduces what social scientists call “self-selection bias”: people who choose to contribute often share similar motivations, values, and sometimes political orientations.

Just as Wikipedia depends on this voluntary participation, so does Community Notes, Musk’s fact-checking tool on X (formerly Twitter). An analysis I conducted with colleagues shows that its most frequently cited source, after X itself, is in fact Wikipedia.

Other frequently cited sources are also concentrated among centrist or left-leaning media. Community Notes relies on the same list of “approved” sources as Wikipedia, which is precisely the core of Musk’s criticism of the open online encyclopedia. Yet no one blames Musk for this bias.


Wikipedia remains one of the few major platforms that openly acknowledges its limitations and documents them. The pursuit of neutrality is enshrined as one of its five fundamental principles. Biases exist, of course, but the infrastructure is designed to make them visible and correctable.

Articles often present multiple points of view, discuss controversies, and even devote entire sections to conspiracy theories, such as those surrounding the 9/11 attacks. Disagreements are visible in the edit history and on talk pages, and disputed statements are flagged. The platform is imperfect but self-regulating, built on pluralism and open debate.

Is artificial intelligence neutral?

If Wikipedia reflects the biases of its human contributors and the sources they use, AI suffers from the same problem with its training data.

The large language models (LLMs) used by Grok are trained on huge corpora drawn from the internet, such as social networks, books, newspaper articles, and Wikipedia itself. Studies have shown that these models reproduce the biases present in their training data, whether related to gender, politics, or race.

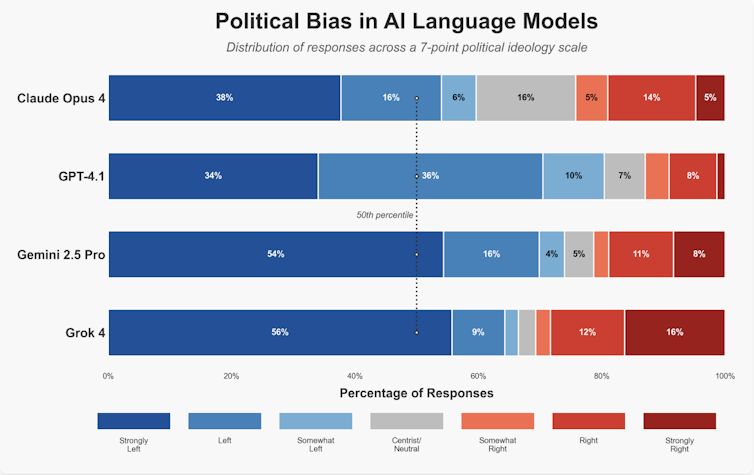

Musk claims that Grok is designed to counter such distortions, but Grok itself has been accused of bias. One test, which asked four major language models 2,500 political questions, appears to show that Grok is more politically neutral than its competitors, yet still has a slight left-wing bias (the others lean further left).

Michael D’Angelo/Promptfoo, CC BY-SA

If the model underlying Grokipedia relies on the same data and algorithms, it is hard to see how an AI-driven encyclopedia could avoid reproducing the very biases for which Musk criticizes Wikipedia. Worse, LLMs could exacerbate the problem. They operate on a probabilistic basis, predicting the most likely next word or phrase from statistical regularities rather than through human deliberation. The result is what researchers call an “illusion of consensus”: a response that sounds authoritative but masks uncertainty or diversity of opinion.

As a result, LLMs tend to flatten political diversity and favor majority viewpoints at the expense of minority ones. These systems therefore risk turning collective knowledge into a smooth but superficial narrative. When bias is hidden beneath fluent prose, readers may not even realize that other perspectives exist.

Don’t throw the baby out with the bathwater

That said, AI can also support a project like Wikipedia. AI tools already help detect vandalism, suggest sources, and identify inconsistencies in articles. Recent research shows that automation can improve accuracy when it is used transparently and under human supervision.

AI could also ease the transfer of knowledge between different language editions and bring the community of contributors closer together. Properly implemented, it could make Wikipedia more inclusive, efficient, and accountable without abandoning its human-centered ethos.

In the same way that Wikipedia can learn from artificial intelligence, X could learn from Wikipedia’s consensus-building model. Community Notes lets users propose and rate notes on posts, but its design limits direct discussion between contributors.

Another research project I took part in showed that deliberation-based systems, inspired by Wikipedia’s talk pages, improve accuracy and trust among participants, including when the deliberation involves both humans and AI. Encouraging dialogue, rather than simply voting yes or no, would make Community Notes more transparent, more pluralistic, and more resistant to political polarization.

Profit and motivation

The deeper difference between Wikipedia and Grokipedia lies in their purpose and, undoubtedly, their economic model. Wikipedia is run by the nonprofit Wikimedia Foundation, and most of its volunteers are motivated primarily by the public interest. By contrast, xAI, X and Grokipedia are commercial ventures.

Although the pursuit of profit is not inherently immoral, it can distort incentives. When X began selling its blue check verification, credibility became a commodity rather than a sign of trust. If knowledge is monetized in a similar way, bias could become more pronounced, shaped by whatever generates the most engagement and revenue.

Real progress lies not in abandoning human cooperation but in improving it. Those who perceive bias on Wikipedia, including Musk himself, could do more by encouraging participation from editors of diverse political, cultural, and demographic backgrounds, or by joining collective efforts to improve existing articles themselves. In an age increasingly marked by misinformation, transparency, diversity and open debate remain our best tools for getting closer to the truth.